Artificial intelligence: entering a socio-technological tornado

Artificial intelligence (AI) is one of those technological revolutions that shake up, amaze, disrupt and worry society. Everyone is talking about it, and many are using it without even realizing it. But to understand this technological whirlwind, we need to delve into the intricacies of artificial intelligence.

By Reine Mbang Essobmadje, CEO of Evolving Consulting

The term “artificial intelligence”, coined by John McCarthy and abbreviated to “AI”, is defined by its creator as “the science and engineering of making intelligent machines, especially intelligent computer programs”.

Artificial intelligence is a set of technological processes used to simulate human intelligence. In practical terms, AI refers to the machines and software, built on algorithms, that assist both individuals and businesses in their daily lives.

For the uninitiated, this term encompasses everything and nothing. Indeed, it is difficult to conceptualize something as abstract as intelligence, or the workings of our own neurons. So, to better understand artificial intelligence and its applications, it is important to first understand the different types of algorithms used and the types of artificial intelligence.

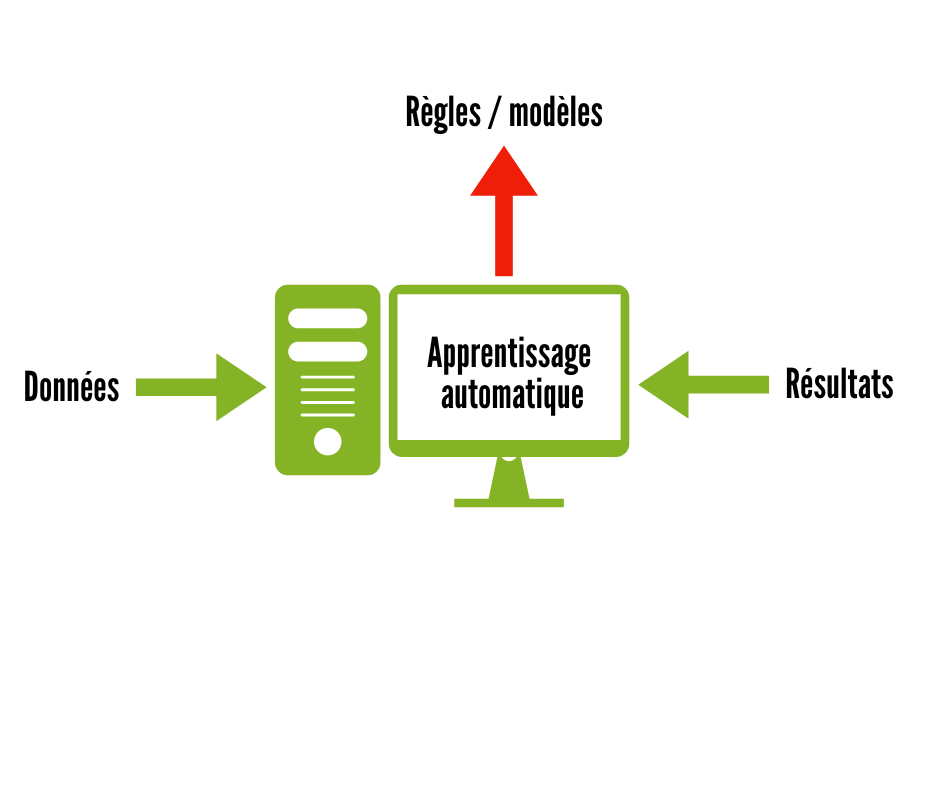

AI is based on machine learning (creating rules and models from data and results) and on computational thinking.

Machine learning was defined in 1959 by its pioneer, Arthur Samuel, as “the field of study that gives computers the ability to learn without being explicitly programmed”. It is an approach based on statistical analysis that enables computers to improve their performance from data and to perform tasks they have not been explicitly programmed for. Deep learning is a subset of machine learning inspired by the nervous systems of living beings: deep learning algorithms process the information they receive through layers of artificial neurons, in a way loosely similar to how our own neurons respond to nerve signals.

A simple example is our smartphones: if you regularly type slang words into your phone, it will add them to its vocabulary and adjust its rules and models to better anticipate what you are about to type.
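To make the smartphone example more concrete, here is a deliberately simplified Python sketch of a keyboard that “learns” from what its user types. It counts which word tends to follow which (a so-called bigram model) and suggests the most frequent followers. The class `NextWordPredictor` and its methods are hypothetical names chosen for illustration; real smartphone keyboards rely on far more sophisticated, often neural, language models, so this only illustrates the principle, not any actual product.

```python
from collections import Counter, defaultdict


class NextWordPredictor:
    """Hypothetical toy keyboard that learns which word tends to follow which.

    A bigram-counting sketch for illustration only; real smartphone
    keyboards use far more sophisticated (often neural) language models.
    """

    def __init__(self) -> None:
        # For each word, a Counter of the words the user typed right after it.
        self.following = defaultdict(Counter)

    def learn(self, sentence: str) -> None:
        """Update the counts from one sentence the user has typed."""
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            self.following[current][nxt] += 1

    def suggest(self, word: str, k: int = 3) -> list[str]:
        """Return up to k likely next words, most frequent first."""
        # .get avoids creating an empty entry for words never seen before.
        counts = self.following.get(word.lower(), Counter())
        return [w for w, _ in counts.most_common(k)]


keyboard = NextWordPredictor()
for typed in ["see you later", "see ya later", "see ya tomorrow"]:
    keyboard.learn(typed)

print(keyboard.suggest("see"))  # the slang "ya" now ranks first
```

Nobody wrote a rule saying that “ya” should follow “see”: the suggestion emerges purely from the data the user typed, which is the essence of learning “without being explicitly programmed”.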

Computational thinking is concerned with solving problems, designing systems or even understanding human behavior by drawing on the fundamental concepts of theoretical computer science. Computational thinking is the synergy between human creative intelligence and the information processing capabilities of information and communication technologies.

It relies on a set of tools, techniques and concepts (decomposition, abstraction, pattern recognition and algorithmic reasoning).

– A technological wonder

Artificial intelligence (AI) vastly increases what is possible, with a speed unique to machines, enabling computers to process and produce large amounts of information and data in record time. It has applications in a wide range of sectors:

🡺 In transport: autonomous vehicles promise to make driving more comfortable, safer and more environmentally friendly thanks to virtual driving systems, high-resolution maps and optimized routes.

🡺 In agriculture: AI combines climate management, soil analysis, disease detection and hygrometry to improve crop yields.

🡺 In finance: AI enables fraud detection, KYC (know your customer) checks and regulatory compliance, among other things.

🡺 In marketing and advertising: AI can be used to create audio, video and text content.

🡺 In healthcare: AI systems can help diagnose and prevent diseases and epidemics, speed up the discovery of treatments and make self-monitoring tools more widely available.

🡺 In justice: AI is being used to manage crises and assess the risk of recidivism. It is also increasingly used in the fight against cybercrime.

AI is emerging as an essential lever for achieving SDG 4, the fourth of the United Nations Sustainable Development Goals, which aims to “ensure inclusive and equitable quality education and promote lifelong learning opportunities for all”. The vast possibilities of AI could help bring education to everyone, including in areas that have long been educationally disadvantaged.

– Growing use

TechJury’s AI statistics show massive adoption and growing use:

▪ The global artificial intelligence market was estimated at $136.6 billion in 2022.

▪ The global AI market is expected to reach $1,811.8 billion by 2030.

▪ AI could add $15.7 trillion to global GDP by 2030.

▪ The global adoption rate of AI has steadily increased and now stands at 35%.

▪ Investment in AI startups has increased sixfold since 2000.

▪ AI could increase business productivity by 40%.

▪ 77% of the devices we use already have some form of AI.

▪ 97% of mobile users use AI-powered voice assistants.
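Taken together, the two market-size estimates imply a very steep growth rate. The short Python sketch below computes the compound annual growth rate (CAGR) they imply, assuming that both figures come from the same source and describe the same market over the eight years from 2022 to 2030. The function name `implied_annual_growth` is a hypothetical helper introduced for illustration.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate (CAGR) linking two values `years` apart.

    Solves start_value * (1 + r) ** years == end_value for r.
    """
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market-size estimates quoted above, in billions of US dollars.
# Assumption: both figures describe the same market, from 2022 to 2030.
rate = implied_annual_growth(136.6, 1811.8, 2030 - 2022)
print(f"Implied growth: {rate:.1%} per year")
```

Under these assumptions, the market would have to grow by roughly 38% every year for eight years running, which gives a sense of how ambitious these projections are.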

AI is making its way into our daily lives, gradually taking over functions performed by humans, which may lead to the loss of certain skills and know-how. Most importantly, it is changing our society. It also brings its own set of risks, particularly those associated with:

🡺 Intellectual property: artificial intelligence tools depend on vast information bases built from existing works. What about the copyright and intellectual property rights attached to all the material these tools use? Is the software enriched by the content its users provide? What kind of licenses could be granted for these AI software products? Clearly, regulation is needed to ensure that intellectual works and knowledge remain the property of their authors, and that these authors are credited whenever their work is reused.

🡺 Cybercrime: the many uses of deepfakes could pave the way for cybercrime, including identity theft, impersonation and cyberharassment.

– Towards a social divide?

Artificial intelligence is inexorably leading to the disappearance of certain jobs, as machines perform them more efficiently and quickly. According to a report by the McKinsey Global Institute, automation, including AI, could displace around 15% of the global workforce, or around 400 million workers, by 2030.

Could AI surpass humans? As artificial intelligence tools become increasingly reliable, certain professions will have to evolve, and thousands of people may lose their jobs. So how do we manage the competition between machines and humans? What kind of society are we heading towards when machines compete with humans? How can we strike the right balance to allow for harmonious coexistence and, above all, personal fulfilment, at a time when mental health is an increasingly important issue and this competition only adds to the pressure to perform? Clearly, each country should approach the introduction of AI in light of the societal challenges it faces.

What about bias?

Computer science stands out in that the majority of algorithms are developed by men, who make up more than 75% of the sector’s workforce. We already see a range of biases in various software, tools and applications, and these could grow with AI (which would learn from a biased social and cognitive model), with less ability to mitigate these risks. The result could be a wider gap between men and women, and between digitally literate and digitally illiterate populations. In the age of AI, when machines can produce much more, much faster and better than humans, digital illiteracy is a serious handicap.

Potential misuse of AI:

AI poses a non-negligible threat to cybersecurity. This includes, but is not limited to:

– Deepfakes: the creation and distribution of fake video or audio content created by an AI

– Hacking into autonomous vehicles: taking control of a vehicle in order to carry out a terrorist attack, for example

– Hacking into electrical, logistics or other systems and infrastructures

– Dissemination of false information (identity theft, cyber-bullying)

– Violation of human rights

– Infringement of intellectual property rights

– …

Illustration: the case of ChatGPT

ChatGPT (GPT stands for Generative Pre-trained Transformer) is one of the most widely used AI tools, with more than 100 million users in the two months following its launch.

Who owns the intellectual property rights of the content generated by ChatGPT?

The question of who owns the content generated by ChatGPT is the subject of legal debate. Article 3(a) of OpenAI’s Terms of Use assigns the rights to generated content to the user (https://openai.com/policies/terms-of-use). In principle, this means that articles, blogs, books and other texts generated by ChatGPT belong to the user, who may use this content for any lawful purpose, including commercial ones (sale, publication), provided that he or she complies with OpenAI’s Terms of Use (article 3(a)). By the same token, responsibility for the content rests with the user, who must ensure that it violates neither applicable laws nor OpenAI’s Terms of Use. However, these terms prohibit presenting content generated by the AI as human-generated when it is not. Likewise, the use of automatically generated content must comply with OpenAI’s Usage Policy, which prohibits the use of ChatGPT for any unlawful purpose.

Precedents for the use of AI

Given the novelty of the issues surrounding AI, legal precedents relating to its use are relatively rare. However, in the United States, attorney Steven Schwartz was brought before a judge after relying on incorrect information generated by ChatGPT: in a court filing, he cited non-existent court decisions that the AI had invented. This raises questions about the reliability, accuracy and integrity of the data when ChatGPT’s sources and databases are undisclosed or unknown.

Will the AI be enriched by user content?

ChatGPT is also used to proofread texts and translate documents, and it is reasonable to assume that the AI may be enriched by this content. But what about the intellectual property of the original creator, who used ChatGPT only for translation? Could a Creative Commons-type license be envisaged? Creative Commons licenses are a set of licenses that define the conditions under which works may be reused and distributed.

– What is the legislation?

A few examples from around the world

In Europe: the European Commission has proposed a regulation on AI to better regulate these technological innovations and promote their ethical and responsible use. Following the Commission’s work, the European Parliament has committed to adopting the first international legal text on AI (https://www.europarl.europa.eu/news/fr/headlines/society/20230601STO93804/loi-sur-l-ia-de-l-ue-premiere-reglementation-de-l-intelligence-artificielle). For now, however, AI is not yet governed by a binding legal text, although a number of non-binding initiatives promote ethical rules for AI (charters, codes of conduct, best practice guides, guidelines).

In the United States: Despite discussions on the legal framework for AI, no binding standard has yet been adopted in the United States.

In Africa: the DRC and the Republic of Congo

The Democratic Republic of Congo is one of the first African countries to take an interest in developing AI. At present, the DRC has no legislation in this area. However, in its National Digital Plan, the country promotes the launch of projects in artificial intelligence, augmented reality, robotics, home automation, nanotechnology and bionics (intelligent prostheses, augmented humans).

There are, however, a few non-binding references, notably Asimov’s Laws of Robotics, which remain the benchmark for AI regulation in the DRC (https://paradigmhq.org/politiques-de-lintelligence-artificielle-et-de-prise-de-decision-automatisee-en-rd-congo/?lang=en).

Since 24 February 2022, the Republic of Congo has been home to Africa’s first artificial intelligence research centre, the African Research Centre on Artificial Intelligence (ARCAI). Funded by the United Nations Economic Commission for Africa (ECA) and a number of partners, ARCAI aims to reduce the digital divide by helping Africans gain access to digital tools.

In general, AI is not yet subject to a strict and binding regulatory framework, although many countries are in the process of enacting legislation. For the time being, most existing instruments are not legally binding. There is therefore an urgent need to provide a framework for AI-related activities, which too often give rise to abuses.

– What new social and societal model?

Artificial intelligence makes it possible to produce or improve content, but also to transform it. AI is penetrating all sectors of activity, from marketing to robotics, education, health, finance and agriculture.

There is much talk about deepfakes, which make it possible to age or alter a face, or to doctor a video, in order to create scenarios that never happened.

Are we ultra-protected or ultra-exposed?

We cannot ignore the increased risks of cybercrime, identity theft and cyberharassment that can result from poor legal oversight or misuse of artificial intelligence tools. We therefore need to think hard about all the ethical issues surrounding AI.

Given that Africa lags behind the rest of the world in digital technology, we naturally wonder about the risks posed by this avalanche of solutions, products and technologies, which could undermine a society that is still being built, where the majority of the population is young, tech-savvy and open to the world. It therefore seems essential to hold a real debate on the use of AI specifically in Africa, weighing the benefits it could bring in tackling climate change, in agriculture and even in improving education against the risks it poses to a society that lacks reference points, is unstructured and fragmented, and is sometimes in rebellion against institutions. Technological tools will only find their place in a society where people are educated to use them responsibly and intelligently to improve everyone’s daily lives, leaving no one behind.

🡺 How can we regulate an evolving technology? The fundamental question, therefore, is how to regulate AI to prevent harmful uses and guarantee an operating framework that does not violate the rights of content producers on the internet. Major technological developments such as mobile money, among others, have shown that appropriate regulation takes time to put in place. The stakes raised by AI are far greater than those of digital finance, personal data management or 5G, and therefore call for genuine consultation between stakeholders to safeguard the rights and well-being of everyone online.